New LLM Optimization Technique Redefines Memory Efficiency

Last updated: 2024-12-17

The efficiency and scalability of deep learning models are a persistent concern in artificial intelligence. A recently reported optimization technique has caught the attention of developers and researchers alike. Shared on Hacker News, the story titled "New LLM optimization technique slashes memory costs" describes how this innovation could change the way Large Language Models (LLMs) are built and deployed.

Understanding LLMs and Their Challenges

Large Language Models, such as GPT-4, have transformed the way we interact with technology, allowing for unprecedented natural language understanding and generation. However, their deployment is often hampered by their substantial memory requirements. LLMs typically demand huge amounts of RAM and computational power, making them less accessible for developers working on smaller projects or in resource-constrained environments.

As these models continue to grow in size and complexity, the necessity for innovative optimization techniques becomes increasingly pressing. The ability to democratize AI technology hinges not just on improving model performance but also on reducing the overhead associated with these complex systems.

Overview of the New Optimization Technique

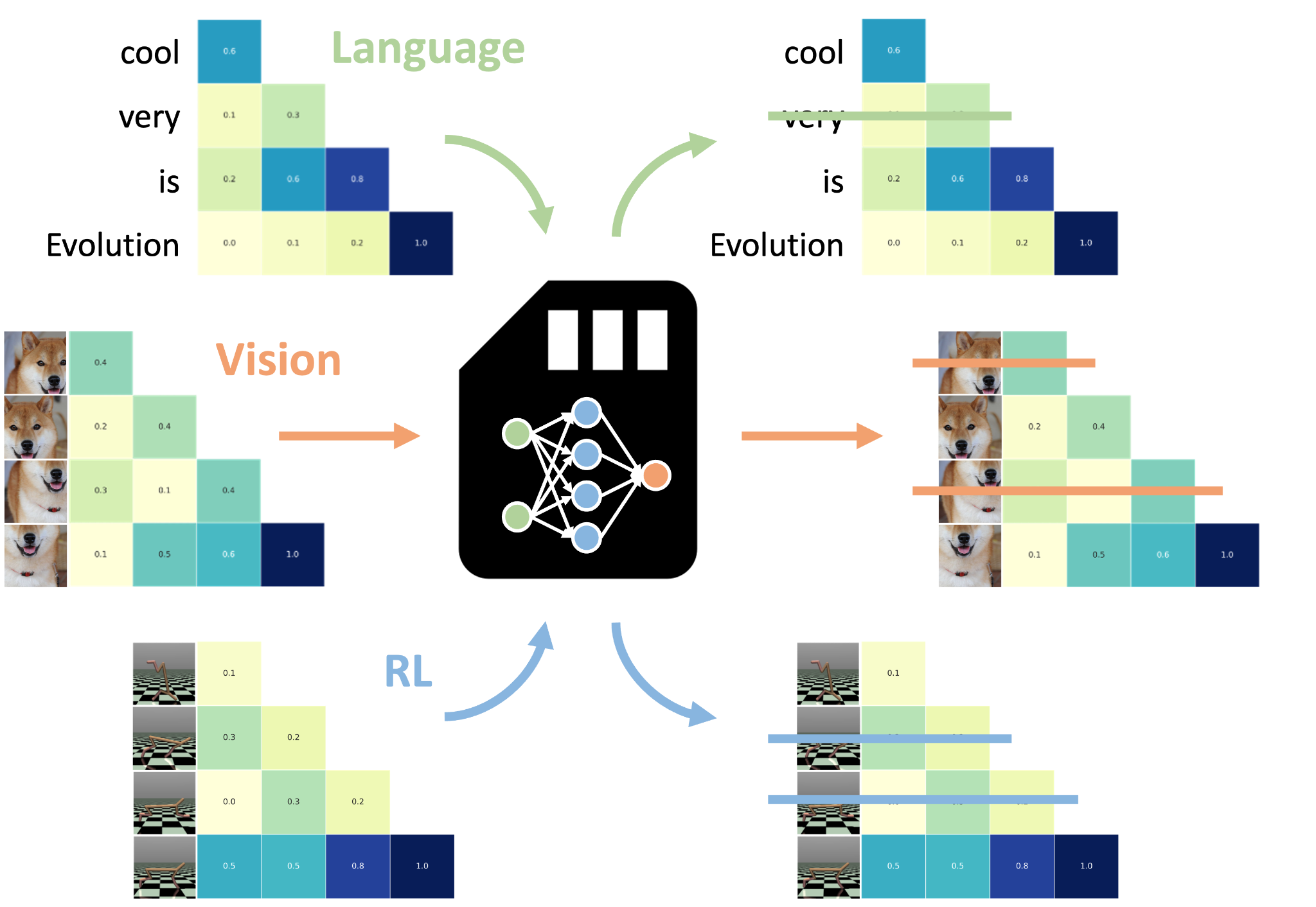

The recently introduced optimization technique promises to cut these memory costs significantly. The details of the methodology are complex, but the essence lies in improved data handling and a different approach to managing model parameters. By optimizing how a model accesses and uses its stored data during training and inference, the technique can yield a much leaner operational footprint.

Key Features of the Technique

The method reduces memory usage while maintaining, or even improving, performance metrics such as speed and accuracy. Its key features are:

- Layer-wise Memory Optimization: Memory is allocated dynamically according to the model's real-time needs, which vary across the different stages of processing.

- Lazy Loading of Parameters: Instead of loading all parameters at once, this technique loads only what's necessary when it's required, greatly reducing the instantaneous memory footprint.

- Revised Gradient Computation: The technique optimizes the forward and backward passes during training, improving training efficiency while lowering memory consumption.
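
To make the layer-wise and lazy-loading ideas above more concrete, here is a minimal sketch in plain Python. It is not the technique's actual implementation, which the story does not detail. The class `LazyLayerStore`, the helper `make_loader`, and the toy "layers" (lists of floats standing in for weight tensors) are all hypothetical names invented for illustration. The sketch only shows the general pattern: parameters are materialized when a layer is first needed and released before the next layer is loaded.

```python
from typing import Callable, Dict, List


class LazyLayerStore:
    """Hypothetical store that materializes a layer's parameters only on demand."""

    def __init__(self, loaders: Dict[str, Callable[[], List[float]]],
                 max_resident: int = 1) -> None:
        self._loaders = loaders            # name -> function that loads the weights
        self._resident: Dict[str, List[float]] = {}
        self._max_resident = max_resident  # how many layers may sit in memory at once
        self.peak_resident = 0             # largest number of layers held at one time

    def get(self, name: str) -> List[float]:
        if name not in self._resident:
            # Evict the oldest loaded layers until there is room for the new one.
            while len(self._resident) >= self._max_resident:
                oldest = next(iter(self._resident))
                del self._resident[oldest]
            self._resident[name] = self._loaders[name]()
        self.peak_resident = max(self.peak_resident, len(self._resident))
        return self._resident[name]


def make_loader(scale: float, size: int = 4) -> Callable[[], List[float]]:
    """Stand-in for reading one layer's weights from disk (assumption)."""
    return lambda: [scale] * size


def forward(store: LazyLayerStore, layer_names: List[str],
            x: List[float]) -> List[float]:
    """Toy forward pass: each 'layer' multiplies the input elementwise."""
    for name in layer_names:
        weights = store.get(name)  # loaded here, not at model construction
        x = [xi * w for xi, w in zip(x, weights)]
    return x


layer_names = ["layer0", "layer1", "layer2"]
store = LazyLayerStore(
    {name: make_loader(float(i + 1)) for i, name in enumerate(layer_names)}
)
output = forward(store, layer_names, [1.0, 1.0, 1.0, 1.0])
print(output, store.peak_resident)
```

With `max_resident=1`, only one of the three layers is held in memory at any moment, at the cost of reloading a layer if it is needed again. Real systems would face the same trade-off between memory footprint and load latency.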

Measurable Impacts on Memory Costs

Initial tests of this technique are promising. Reports indicate reductions in memory usage of as much as 50% without significant sacrifices in performance. This matters to researchers and practitioners who often struggle to deploy LLMs because of hardware limitations.
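
As a rough illustration of what a reduction of up to 50% could mean in practice, the short calculation below estimates the memory needed just to hold a model's weights. The 7-billion-parameter model size and the 2 bytes per parameter (16-bit weights) are assumptions chosen for the example, not figures from the story. It also assumes the reported reduction applies to weight memory, which the reports do not specify, and it ignores activations and other runtime overhead.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Hypothetical model: 7 billion parameters stored as 16-bit values.
baseline_gb = weight_memory_gb(7e9, 2)

# Apply the upper end of the reported reduction (50%).
reduction = 0.50
reduced_gb = baseline_gb * (1 - reduction)

print(f"baseline: {baseline_gb:.1f} GB, reduced: {reduced_gb:.1f} GB")
```

Under these assumptions the weights would drop from about 14 GB to about 7 GB, which is the kind of difference that can decide whether a model fits on a single consumer-grade accelerator.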

Furthermore, the implications of these reductions go beyond memory savings. Faster processing can mean lower power consumption, which aligns with the growing demand for sustainable AI practices. As environmental concerns take center stage in technology development, this optimization technique could pave the way for more environmentally friendly AI operations.

Implications for Developers and Businesses

For developers, the ability to deploy LLMs with significantly lower resource requirements means opportunities for greater creativity and experimentation. Smaller teams can now afford powerful models, opening the door for more innovation across various sectors, including education, healthcare, and entertainment.

For businesses, implementing these optimized models translates to cost savings. Less reliance on powerful hardware reduces operational expenditure, while quicker processing capabilities increase throughput and productivity.

The Future of LLM Optimization

The introduction of this novel optimization technique does not just signify a step forward in memory efficiency; it heralds a new chapter in the evolution of LLMs. As AI technology continues to advance, the ability to craft models that are not only powerful but also efficient is crucial for sustainable growth in this field.

Future research will likely focus on refining these methods and exploring additional optimizations that could further enhance performance. Collaborations amongst researchers, developers, and industry leaders will be vital to driving forward these innovations.

Community Response and Further Developments

The Hacker News thread accompanying the article has ignited discussions among industry professionals, academic researchers, and AI enthusiasts. Suggestions, shared experiences, and theoretical explorations abound in the comments. Many users expressed excitement about the potential applications of this optimization technique, while others described the memory challenges they have faced when deploying LLMs.

This community feedback is invaluable, providing insights that could shape the next stages of research and application for these technologies. It emphasizes the importance of collective knowledge and the role of collaborative practices in technological advancement.

Standing on the Brink of AI's Next Phase

The emergence of a new optimization technique for Large Language Models promises to make AI more efficient, accessible, and impactful. By addressing memory costs, this innovation not only enhances the performance of these models but also paves the way for broader use cases across industries. As we stand on the brink of AI's next phase, it's clear that continuous refinement and collaborative efforts will be key in realizing the full potential of these technologies.